You may or may not be familiar with the laziness doctrine of computing, but it’s something I’ve been mentioning in my classes for years. It usually comes up when someone wants to celebrate programming’s countercultural side. It goes something like this: “Laziness is at the heart of programming, and programmers are some of the laziest people out there. For instance, we will spend a whole weekend coding a solution that shaves a few seconds off a routine task we have to complete every day.”

This theory of enlightened laziness has been a mainstay of computing for decades, and in a way it has driven a surprising number of advances. The Trojan Room coffee pot is a classic example: researchers in the Computer Laboratory at the University of Cambridge, tired of walking to the pot only to find it empty, pointed a camera at it so they could check the coffee level from their desks. That camera became what is often called the first webcam.

However, with the advent of AI-assisted coding, this old doctrine has taken on a new context, one that can be vexing for teachers and young learners. During a recent lesson on Python variables, I asked my students to get input from the user and build a Madlib using variables and print commands. It is a time-tested lesson that teaches input/output fundamentals well while also being a lot of fun.
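For readers who haven’t run this exercise, here is a minimal sketch of what a Madlib program along these lines might look like in Python. The prompts, the story text, and the `build_madlib` and `main` helpers are hypothetical illustrations, not the actual assignment; the story is assembled in its own function only so it can be checked without typing at a keyboard.

```python
# A minimal Madlib sketch: collect words with input(), store them in
# variables, and print a story that uses them.
# The prompts, story text, and function names are hypothetical examples.


def build_madlib(name, animal, verb, food):
    """Return the finished story built from the user's words."""
    return (
        f"One morning, {name} found a {animal} in the kitchen. "
        f"It began to {verb} around the table, "
        f"looking for a plate of {food}."
    )


def main():
    # Each input() call pauses the program and stores the answer in a variable.
    name = input("Enter a name: ")
    animal = input("Enter an animal: ")
    verb = input("Enter a verb: ")
    food = input("Enter a food: ")

    # print() displays the completed story on the screen.
    print(build_madlib(name, animal, verb, food))


# To play interactively, call main().
```

A beginner version would more likely join the words with `+` instead of f-strings; either approach exercises the same variable and input/output skills.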

Students were working diligently in my lab when one student finished almost immediately. He quickly admitted that he had used Replit’s Ghostwriter AI assistant to write both the code and the story for him. The exchange that followed was closely watched by the rest of the class, many of whom would have liked to use Ghostwriter themselves.

My position was that using AI to write code as a learner is akin to cheating: it robs a young learner of the experience of writing the code themselves. The student responded by citing the laziness doctrine I had mentioned a few weeks earlier. If programmers really are lazy, wasn’t he within his rights to use AI in this case?

I have to admit I was a bit stumped. On one hand, I knew I was right: young programmers need to know how to produce clean, efficient code before they start using large language models (LLMs) to write it for them. On the other hand, I realized he had a point. At the very least, the laziness doctrine needed updating for the age of machine learning.

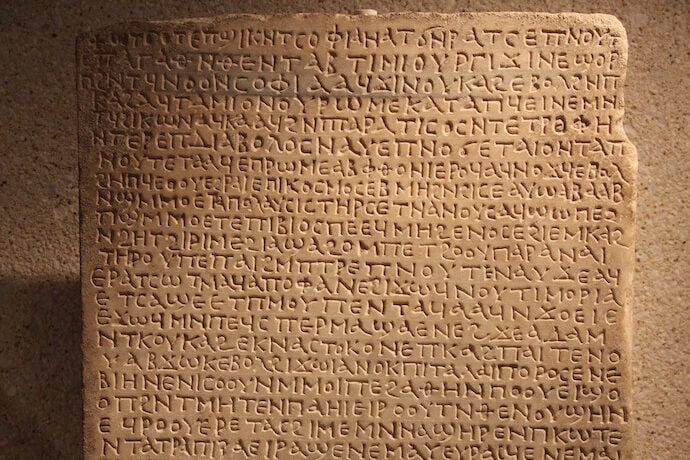

After doing some research, I found that this tension is nothing new in the world of information technology, not by a long shot. In Plato’s dialogue Phaedrus, Socrates expresses the same worry about writing.

“For this invention [writing] will produce forgetfulness in the minds of those who learn to use it, because they will not practice their memory. Their trust in writing, produced by external characters which are no part of themselves, will discourage the use of their own memory within them… and will therefore seem to know many things, when they are for the most part ignorant…since they are not wise, but only appear wise.”

Socrates’ worry about writing maps neatly onto my conundrum with Python. Does using LLMs to generate code inhibit learning? At what point does a new technology simply supplant an older, outmoded one? Are our fears of an ignorant generation of learners unfounded? These are big questions, and neither Socrates nor any teacher I know has truly answered them.

It is worth noting, though, that we only know Socrates’ views on this issue because his young disciple Plato chose to write them down, a rather bittersweet irony.

In the end, I did make the student rewrite the code without Ghostwriter. I did, however, concede his point about the laziness doctrine, and I don’t imagine I will be stating it as loudly or boldly as I have in the past. There are limits to laziness in my 8th-grade classes, at least for the time being.

LLMs are impressive, but learning a language should be a prerequisite for using a “language model”. I can see this thinking being eclipsed by a new mental model within a few years, but for now, my variables project still requires students to type their commands and variables by hand.