Claude Shannon is a key figure in US computer science, electrical engineering, and a number of related fields. He was born in Petoskey, Michigan, in 1916. His father was a businessman, his mother a school principal, and his sister Margaret graduated from the University of Michigan with a master’s degree in mathematics in 1932. As a kid, Shannon delivered telegrams and newspapers and built model planes, a remote-controlled boat, and a simple telegraph system to a friend’s house.

Shannon graduated from the University of Michigan in 1936 with two degrees, in electrical engineering and mathematics. That year he started work as a graduate student at the Massachusetts Institute of Technology (MIT), where he also worked on Vannevar Bush’s differential analyzer, one of the first analog computers.

While working on the complicated circuits in Bush’s differential analyzer, Shannon realized that Boolean algebra, an algebra of logic based on two values, could be used to simplify the circuits. His master’s thesis at MIT described how Boolean algebra could be applied to circuit design. It is considered one of the most important master’s theses ever written due to its later impact on computer science and technology development.
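To make the idea concrete, here is a minimal sketch in Python, not taken from Shannon’s thesis. It assumes the usual reading of switches wired in series as AND and switches wired in parallel as OR. The circuit and the function names (`series`, `parallel`, `original_circuit`, `simplified_circuit`, `equivalent`) are hypothetical, chosen only for illustration. The sketch checks every input combination to confirm that a four-switch circuit behaves exactly like a single switch.

```python
from itertools import product


def series(a: bool, b: bool) -> bool:
    """Two switches in series conduct only when both are closed (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel conduct when either is closed (OR)."""
    return a or b


def original_circuit(a: bool, b: bool) -> bool:
    # Hypothetical circuit: (a in series with b) in parallel with
    # (a in series with NOT b). It uses four switch contacts.
    return parallel(series(a, b), series(a, not b))


def simplified_circuit(a: bool, b: bool) -> bool:
    # Boolean algebra reduces (a AND b) OR (a AND NOT b) to just a.
    return a


def equivalent(f, g, n_inputs: int) -> bool:
    """Compare two circuits on every combination of switch positions."""
    return all(
        f(*bits) == g(*bits)
        for bits in product([False, True], repeat=n_inputs)
    )


print(equivalent(original_circuit, simplified_circuit, 2))  # True
```

Checking the full truth table is practical only for small circuits, but it shows the point of the thesis: once a circuit is written as a Boolean expression, it can be simplified with algebra instead of trial and error.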

While studying at MIT, he also worked as a research fellow at the Institute for Advanced Study in Princeton, where he studied ideas that led to his key contributions to digital communications theory. He had the opportunity to discuss his ideas with mathematicians and scientists including Hermann Weyl and John von Neumann. At times, Shannon also crossed paths with Albert Einstein and Kurt Gödel.

In 1941, after his studies at MIT, Shannon joined Bell Telephone Laboratories, where scientists were allowed to study any topic. During World War II, he worked at Bell Labs on fire-control systems and cryptography. For two months, he had contact with Alan Turing, the British cryptanalyst and mathematician, when Turing visited the US to share British methods of breaking German codes.

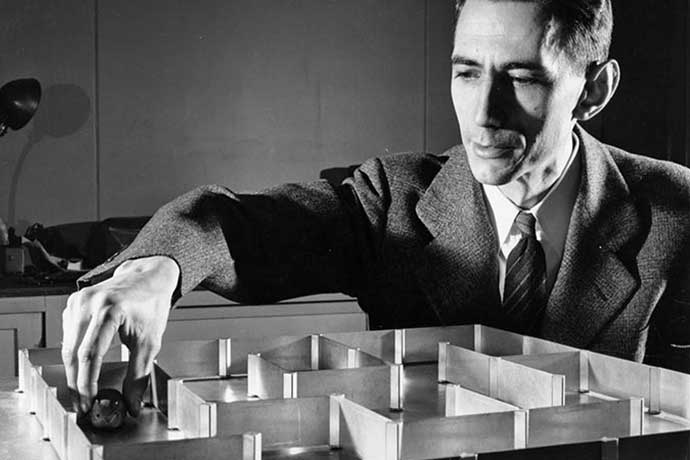

After the war, at Bell Labs, Shannon worked on digital communications theory, publishing a key work, A Mathematical Theory of Communication, in 1948. Its ideas laid groundwork that others built on to develop digital computers and networks. He also studied and wrote about cryptography, game theory, and machine learning. For example, in 1950, he built an electro-mechanical mouse that learned to go through a maze of 25 squares based on its experience and memory of dead ends and open paths.

In 1956, Shannon returned to MIT to join the faculty and worked there until 1978. He eventually developed Alzheimer’s disease and passed away in 2001. According to his family, due to his disease, Shannon was largely oblivious to the explosion of public internet use and personal computing starting in the mid to late 1990s, all of it built on his work and insights into how to simplify the ad hoc circuits in the first computers.

What Did Shannon Contribute?

Claude Shannon’s great contribution is the idea that all data can be reduced to one simple unit, either on or off. These units are called binary digits, or bits. Imagine a world without bits. There would be no electronics as we know them, no mobile phones, no data storehouses, no internet. Shannon took an earlier idea developed by the English mathematician George Boole, an algebra built on two values, and used it to describe data and to quantify and measure the data that flowed across the AT&T telephone networks. Where others invented computers, Shannon formulated ideas to describe and quantify information, ideas that made possible computers and the networks that distribute data.
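A short Python sketch can illustrate both halves of this idea: reducing data to bits, and measuring how much information a message carries. The entropy formula, H = sum of p × log2(1/p) over the symbols in a message, comes from Shannon’s communication paper. The function names `to_bits` and `entropy_bits` and the sample strings are assumptions made for this example, and estimating probabilities from the character counts of one short message is a simplification.

```python
import math
from collections import Counter


def to_bits(text: str) -> str:
    """Encode text as UTF-8 and write each byte as eight binary digits."""
    return "".join(format(byte, "08b") for byte in text.encode("utf-8"))


def entropy_bits(message: str) -> float:
    """Estimate Shannon entropy in bits per symbol.

    Probabilities are taken from how often each character appears
    in the message itself, which is a simplification.
    """
    counts = Counter(message)
    total = len(message)
    return sum(
        (count / total) * math.log2(total / count)
        for count in counts.values()
    )


print(to_bits("Hi"))         # 0100100001101001
print(entropy_bits("aaaa"))  # 0.0 (one symbol, no surprise)
print(entropy_bits("abab"))  # 1.0 (two equally likely symbols)
print(entropy_bits("abcd"))  # 2.0 (four equally likely symbols)
```

The more evenly the symbols are spread, the more bits per symbol are needed to send the message, which is the kind of measurement a telephone network designer can plan capacity around.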

In addition to his master’s thesis and his paper, A Mathematical Theory of Communication, Shannon published many papers about his varied research projects at MIT and Bell Labs. He influenced, and was influenced by, many of the key scientists and mathematicians involved in creating computers and electronic communications in the 20th century.

Shannon also contributed to game theory. He and his wife traveled to Las Vegas frequently with Ed Thorp, an MIT mathematician, to play blackjack using theories Thorp developed with John Kelly Jr., a physicist. They made a fortune and wrote a book about their experiences, with the data validated in the early 1960s. Next they applied Kelly’s formula to the stock market, with even better results. The formula has become a part of investment theory, used by Warren Buffett, Bill Gross, and many other successful investors. Shannon’s card-counting techniques for blackjack were written up in the book Bringing Down the House in 2003.
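For readers curious about Kelly’s formula, here is a minimal Python sketch of its simplest form, for a bet that either wins b times the stake or loses the stake. In that case the suggested fraction of the bankroll to wager is f = p − (1 − p) / b, where p is the probability of winning. The function name `kelly_fraction` and the numbers below are illustrative assumptions, not figures from Thorp’s or Shannon’s actual play.

```python
def kelly_fraction(p: float, b: float) -> float:
    """Kelly fraction for a simple win/lose bet.

    p: probability of winning (between 0 and 1)
    b: net odds, the amount won per unit staked (greater than 0)
    A result of zero or less means the bet has no edge and
    the formula suggests not betting.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if b <= 0.0:
        raise ValueError("b must be positive")
    return p - (1.0 - p) / b


# Illustrative even-money bet (b = 1) with a 60% chance of winning:
print(f"{kelly_fraction(0.6, 1.0):.2f}")  # 0.20

# A fair coin at even money has no edge:
print(kelly_fraction(0.5, 1.0))  # 0.0
```

The formula ties bet size to the size of the edge: a small advantage calls for a small bet, which is why it later appealed to investors as well as card players.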

Shannon also invented devices, for example, a box he had on his desk whose sole purpose was to turn itself off. Another device could solve the Rubik’s Cube puzzle. Along with Ed Thorp, Shannon also developed the first wearable computer.

One Interesting Detail

Shannon loved juggling, unicycling, and chess. In 1950, for example, he published a paper, Programming a Computer for Playing Chess, to describe how a computer could be made to play a decent chess game, using mathematics. He also demonstrated some of the concepts he used to create a juggling machine in a video, Claude Shannon Juggling (CBC 1985), listed under Learn More.

An Interesting Footnote

Shannon was not the first to note that Boolean algebra could be applied to electro-mechanical relay circuit design. Victor Shestakov, a Russian engineer at Moscow State University, made the discovery in 1935, two years earlier than Shannon. Both men presented their theses in the same year, 1938. However, Shestakov’s thesis was not published until 1941, and then only in Russian. Shannon’s publication in English, his work at MIT and then Bell Labs, and his direct work with key people involved in the development of computers in the US all combined to give the honor to Shannon. At the least, it shows that great ideas are often distributed across societies. They’re rarely the result of a godlike inspiration by one person at one moment in time.

Learn More

Claude Shannon Biographies

http://www.technologyreview.com/featuredstory/401112/claude-shannon-reluctant-father-of-the-digital-age/

http://www.corp.att.com/attlabs/reputation/timeline/16shannon.html

http://www2.research.att.com/~njas/doc/shannonbio.html

http://en.wikipedia.org/wiki/Claude_Shannon

http://mediahistories.mit.edu/claudeshannon/about-claude/

Victor Shestakov

https://en.wikipedia.org/wiki/Victor_Shestakov

George Boole

http://en.wikipedia.org/wiki/George_Boole

http://en.wikipedia.org/wiki/Boolean_algebras_canonically_defined

http://en.wikipedia.org/wiki/Boolean_algebra

Claude Shannon: The Father of Information Theory (BBC 2012)

Claude Shannon Juggling (CBC 1985)

Claude Shannon’s Theseus Mouse and Maze

http://cyberneticzoo.com/?p=2552

http://museum.mit.edu/150/20